Voice Assistants for Health Self-Management: Designing for and with Older Adults

Amama Mahmood, Shiye Cao, Maia Stiber, Victor Antony, and Chien-Ming Huang, “Voice Assistants for Health Self-Management: Designing for and with Older Adults,” ACM Conference on Human Factors in Computing Systems (CHI), 2025.

Abstract

Supporting older adults in health self-management is crucial for promoting independent aging, particularly given the growing strain on healthcare systems. While voice assistants (VAs) hold the potential to support aging in place, they often lack tailored assistance and present usability challenges. We addressed these issues through a five-stage design process with older adults to develop a personal health assistant. Starting with in-home interviews (N=17), we identified two primary challenges in older adults' health self-management: health awareness and medical adherence. To address these challenges, we developed a high-fidelity LLM-powered VA prototype to debrief doctor’s visit notes and generate tailored medication reminders. We refined our prototype with feedback from co-design workshops (N=10) and validated its usability through in-home studies (N=5). Our work highlights key design features for personal health assistants and provides broader insights into desirable VA characteristics, including personalization, adapting to user context, and respect for user autonomy.

Xpress: Generating Dynamic, Context-Aware Robot Facial Expressions Using Language Models

Victor Antony, Maia Stiber, and Chien-Ming Huang, “Xpress: Generating Dynamic, Context-Aware Robot Facial Expressions Using Language Models,” ACM/IEEE International Conference on Human-Robot Interaction (HRI), 2025.

Abstract

Facial expressions are vital in human communication and significantly influence outcomes in human-robot interaction (HRI), such as likeability, trust, and companionship. However, current methods for generating robotic facial expressions are often labor-intensive, lack adaptability across contexts and platforms, and have limited expressive ranges—leading to repetitive behaviors that reduce interaction quality, particularly in long-term scenarios. We introduce Xpress, a system that leverages language models (LMs) to dynamically generate context-aware facial expressions for robots through a three-phase process: encoding temporal flow, conditioning expressions on context, and generating facial expression code. We demonstrated Xpress as a proof-of-concept through two user studies (n=15 x 2) and a case study with children and parents (n=13), in storytelling and conversational scenarios to assess the system’s context-awareness, expressiveness, and dynamism. Results demonstrate Xpress's ability to dynamically produce expressive and contextually appropriate facial expressions, highlighting its versatility and potential in HRI applications.

“Uh, This One?”: Leveraging Behavioral Signals for Detecting Confusion during Physical Tasks

Maia Stiber, Dan Bohus, and Sean Andrist, “‘Uh, This One?’: Leveraging Behavioral Signals for Detecting Confusion during Physical Tasks,” ACM International Conference on Multimodal Interaction (ICMI), 2024.

Abstract

A longstanding goal in the AI and HCI research communities is building intelligent assistants to help people with physical tasks. To be effective in this, AI assistants must be aware of not only the physical environment, but also the human user and their cognitive states. In this paper, we specifically consider the detection of confusion, which we operationalize as the moments when a user is “stuck” and needs assistance. We explore how behavioral features such as gaze, head pose, and hand movements differ between periods of confusion vs no-confusion. We present various modeling approaches for detecting confusion that combine behavioral features, length of time, instructional text embeddings, and egocentric video. Although deep networks trained on full video streams perform well in distinguishing confusion from non-confusion, simpler models leveraging lighter weight behavioral features exhibit similarly high performance, even when generalizing to unseen tasks.

Poster: Link

Flexible Robot Error Detection Using Natural Human Responses for Effective HRI

Maia Stiber, “Flexible Robot Error Detection Using Natural Human Responses for Effective HRI,” Companion of the ACM/IEEE International Conference on Human Robot Interaction (HRI), 2024.

Abstract

Robot errors during human-robot interaction are inescapable; they can occur during any task and do not necessarily fit human expectations. When left unmanaged, robot errors harm task performance and user trust, resulting in user unwillingness to work with a robot. Existing error detection techniques often specialize in specific tasks or error types, using task or error specific information for robust management and so may lack the versatility to appropriately address robot errors across tasks and error types. To achieve flexible error detection, my work leverages natural human responses to robot errors in physical HRI for error detection across task, scenario, and error type in support of effective robot error management.

Poster: Link

Forging Productive Human-Robot Partnerships via Group Formation Exercises

Maia Stiber, Yuxiang Gao, Russell Taylor, and Chien-Ming Huang, “Forging Productive Human-Robot Partnerships via Group Formation Exercises,” ACM Transactions on Human Robot Interaction, 2024.

Abstract

Productive human-robot partnerships are vital to successful integration of assistive robots into everyday life. While prior research has explored techniques to facilitate collaboration during human-robot interaction, the work described here aims to forge productive partnerships prior to human-robot interaction, drawing upon team building activities' aid in establishing effective human teams. Through a 2 (group membership: ingroup and outgroup) x 3 (robot error: main task errors, side task errors, and no errors) online study (N=62), we demonstrate that 1) a non-social pre-task exercise can help form ingroup relationships; 2) an ingroup robot is perceived as a better, more committed teammate than an outgroup robot (despite the two behaving identically); and 3) participants are more tolerant of negative outcomes when working with an ingroup robot. We discuss how pre-task exercises may serve as an active task failure mitigation strategy.

On Using Social Signals to Enable Flexible Error-Aware HRI

Maia Stiber, Russell Taylor, and Chien-Ming Huang, “On Using Social Signals to Enable Flexible Error-Aware HRI,” ACM/IEEE International Conference on Human-Robot Interaction (HRI), 2023.

Abstract

Prior error management techniques often do not possess the versatility to appropriately address robot errors across tasks and scenarios. Their fundamental framework involves explicit, manual error management and implicit domain-specific information driven error management, tailoring their response for specific interaction contexts. We present a framework for approaching error-aware systems by adding implicit social signals as another information channel to create more flexibility in application. To support this notion, we introduce a novel dataset (composed of three data collections) with a focus on understanding natural facial action unit (AU) responses to robot errors during physical-based human-robot interactions—varying across task, error, people, and scenario. Analysis of the dataset reveals that, through the lens of error detection, using AUs as input into error management affords flexibility to the system and has the potential to improve error detection response rate. In addition, we provide an example real-time interactive robot error management system using the error-aware framework.

Human Response to Robot Errors in HRI Dataset: https://github.com/intuitivecomputing/Response-to-Errors-in-HRI-Dataset

Example Error-Aware Robotic System: https://github.com/intuitivecomputing/Error-Aware-Robotic-System

Effective Human-Robot Collaboration via Generalized Robot Error Management Using Natural Human Responses

Maia Stiber, “Effective Human-Robot Collaboration via Generalized Robot Error Management Using Natural Human Responses,” International Conference On Multimodal Interaction, 2022.

Abstract

Robot errors during human-robot interaction will be unavoidable. Their impact on the collaboration depends on how the human perceives them and on how quickly they are detected and recovered from. Prior work in robot error management often uses task or error specific information for reliable management and so is not generalizable to other contexts or error types. To achieve generalized error management, one approach is the use of human response as input. My PhD thesis will focus on enabling effective human-robot interaction through leveraging users’ natural multimodal response to errors to detect, classify, mitigate, and recover from them. This extended abstract details my past, current, and future work towards this goal.

Modeling Human Response to Robot Errors for Timely Error Detection

Maia Stiber, Russell Taylor, and Chien-Ming Huang, “Modeling Human Response to Robot Errors for Timely Error Detection,” International Conference on Intelligent Robots and Systems (IROS), 2022.

Abstract

In human-robot collaboration, robot errors are inevitable—damaging user trust, willingness to work together, and task performance. Prior work has shown that people naturally respond to robot errors socially and that in social interactions it is possible to use human responses to detect errors. However, there is little exploration in the domain of non-social, physical human-robot collaboration such as assembly and tool retrieval. In this work, we investigate how people’s organic, social responses to robot errors may be used to enable timely automatic detection of errors in physical human-robot interactions. We conducted a data collection study to obtain facial responses to train a real-time detection algorithm and a case study to explore the generalizability of our method with different task settings and errors. Our results show that natural social responses are effective signals for timely detection and localization of robot errors even in non-social contexts and that our method is robust across a variety of task contexts, robot errors, and user responses. This work contributes to robust error detection without detailed task specifications.

Designing User-Centric Programming Aids for Kinesthetic Teaching of Collaborative Robots

Gopika Ajaykumar, Maia Stiber, and Chien-Ming Huang, “Designing User-Centric Programming Aids for Kinesthetic Teaching of Collaborative Robots,” Robotics and Autonomous Systems 145, 2021.

Abstract

Just as end-user programming has helped make computer programming accessible for a variety of users and settings, end-user robot programming has helped empower end-users without specialized knowledge or technical skills to customize robotic assistance that meets diverse environmental constraints and task requirements. While end-user robot programming methods such as kinesthetic teaching have introduced direct approaches to task demonstration that allow users to avoid working with traditional programming constructs, our formative study revealed that everyday people still have difficulties in specifying effective robot programs using these methods due to challenges in understanding robot kinematics and programming without situated context and assistive system feedback. These findings informed our development of Demoshop, an interactive robot programming tool that includes user-centric programming aids to help end-users author and edit task demonstrations. To evaluate the effectiveness of Demoshop, we conducted a user study comparing task performance and user experience associated with using Demoshop relative to a widely used commercial baseline interface. Results of our study indicate that users have greater task efficiency while authoring robot programs and maintain stronger mental models of the system when using Demoshop compared to the baseline interface. Our system implementation and study have implications for the further development of assistance in end-user robot programming.

Not All Errors Are Created Equal: Exploring Human Responses to Robot Errors with Varying Severity

Maia Stiber and Chien-Ming Huang, “Not All Errors Are Created Equal: Exploring Human Responses to Robot Errors with Varying Severity,” International Conference on Multimodal Interaction Late-Breaking Report, 2020.

Abstract

Robot errors occurring during situated interactions with humans are inevitable and elicit social responses. While prior research has suggested how social signals may indicate errors produced by anthropomorphic robots, most have not explored Programming by Demonstration (PbD) scenarios or non-humanoid robots. Additionally, how human social signals may help characterize error severity, which is important to determine appropriate strategies for error mitigation, has been subjected to limited exploration. We report an exploratory study that investigates how people may react to technical errors with varying severity produced by a non-humanoid robotic arm in a PbD scenario. Our results indicate that more severe robot errors may prompt faster, more intense human responses and that multimodal responses tend to escalate as the error unfolds. This provides initial evidence suggesting temporal modeling of multimodal social signals may enable early detection and classification of robot errors, thereby minimizing unwanted consequences.

Reconstructing Sinus Anatomy from Endoscopic Video – Towards a Radiation-free Approach for Quantitative Longitudinal Assessment

Xingtong Liu, Maia Stiber, Jindan Huang, Masaru Ishii, Gregory D Hager, Russell H Taylor, and Mathias Unberath, “Reconstructing Sinus Anatomy from Endoscopic Video--Towards a Radiation-free Approach for Quantitative Longitudinal Assessment”, In Proceedings of the 2020 International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI ‘20).

Abstract

Reconstructing accurate 3D surface models of sinus anatomy directly from an endoscopic video is a promising avenue for cross-sectional and longitudinal analysis to better understand the relationship between sinus anatomy and surgical outcomes. We present a patient-specific, learning-based method for 3D reconstruction of sinus surface anatomy directly and only from endoscopic videos. We demonstrate the effectiveness and accuracy of our method on in and ex vivo data where we compare to sparse reconstructions from Structure from Motion, dense reconstruction from COLMAP, and ground truth anatomy from CT. Our textured reconstructions are watertight and enable measurement of clinically relevant parameters in good agreement with CT. The source code is available at https://github.com/lppllppl920/DenseReconstruction-Pytorch.

An Interactive Mixed Reality Platform for Bedside Surgical Procedures

Ehsan Azimi, Zhiyuan Niu, Maia Stiber, Nicholas Greene, Ruby Liu, Camilo Molina, Judy Huang, Chien-Ming Huang, and Peter Kazanzides, “An Interactive Mixed Reality Platform for Bedside Surgical Procedures”, In Proceedings of the 2020 International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI’20).

Abstract

In many bedside procedures, surgeons must rely on their spatiotemporal reasoning to estimate the position of an internal target by manually measuring external anatomical landmarks. One particular example that is performed frequently in neurosurgery is ventriculostomy, where the surgeon inserts a catheter into the patient’s skull to divert the cerebrospinal fluid and alleviate the intracranial pressure. However, about one-third of the insertions miss the target. We, therefore, assembled a team of engineers and neurosurgeons to develop an interactive surgical navigation system using mixed reality on a head-mounted display that overlays the target, identified in preoperative images, directly on the patient’s anatomy and provides visual guidance for the surgeon to insert the catheter on the correct path to the target. We conducted a user study to evaluate the improvement in the accuracy and precision of the insertions with mixed reality as well as the usability of our navigation system. The results indicate that using mixed reality improves the accuracy by over 35% and that the system ranks high based on the usability score.

SpiroCall: Measuring Lung Function over a Phone Call

Mayank Goel, Elliot Saba, Maia Stiber, Eric Whitmire, Josh Fromm, Eric C. Larson, Gaetano Borriello, and Shwetak N. Patel, "SpiroCall: Measuring Lung Function over a Phone Call," CHI'16, May 07-12, 2016, San Jose, CA (Honorable Mention paper award.)

Abstract

Cost and accessibility have impeded the adoption of spirometers (devices that measure lung function) outside clinical settings, especially in low-resource environments. Prior work, called SpiroSmart, used a smartphone’s built-in microphone as a spirometer. However, individuals in low- or middle-income countries do not typically have access to the latest smartphones. In this paper, we investigate how spirometry can be performed from any phone—using the standard telephony voice channel to transmit the sound of the spirometry effort. We also investigate how using a 3D printed vortex whistle can affect the accuracy of common spirometry measures and mitigate usability challenges. Our system, coined SpiroCall, was evaluated with 50 participants against two gold standard medical spirometers. We conclude that SpiroCall has an acceptable mean error with or without a whistle for performing spirometry, and advantages of each are discussed.

Deformable Registration with Statistical Shape Model

This code builds upon the ICP code below. In each iteration, the algorithm simultaneously updates the frame transformation between the body and CT coordinates and adjusts the weights of the modes of the statistical shape model using a least squares method.

You can download the code.
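
As a rough illustration of the mode-weight part of such an update, the sketch below solves for statistical shape model weights by linear least squares while holding the frame transformation fixed. It is not the downloadable code: the function name `solve_mode_weights` and the array layout are assumptions made for this example, and the combined update of transformation and weights described above is not reproduced here.

```python
import numpy as np


def solve_mode_weights(samples, mean_pts, mode_pts):
    """Least-squares estimate of shape-model mode weights (illustrative only).

    samples  : (N, 3) body points already mapped into CT coordinates
    mean_pts : (N, 3) matched closest points on the mean shape
    mode_pts : (M, N, 3) displacement of those matched points under each mode

    Returns the weights w that minimize
        || samples - mean_pts - sum_m w[m] * mode_pts[m] ||^2.
    """
    samples = np.asarray(samples, dtype=float)
    mean_pts = np.asarray(mean_pts, dtype=float)
    mode_pts = np.asarray(mode_pts, dtype=float)
    n_modes = mode_pts.shape[0]

    # One column per mode, one row per point coordinate.
    A = mode_pts.reshape(n_modes, -1).T          # shape (3N, M)
    b = (samples - mean_pts).ravel()             # shape (3N,)
    weights, *_ = np.linalg.lstsq(A, b, rcond=None)
    return weights
```

In an iterative scheme, a step like this would alternate with (or be stacked together with) the rigid registration step until the residual stops improving.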

Iterative Closest Point Algorithm

This code runs the Iterative Closest Point algorithm using an octree search to determine the frame transformation between body and CT coordinates. This uses the code from the project below to find the closest point for each point in the body coordinate point cloud.

You can download the code.
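
The basic loop of Iterative Closest Point can be sketched as follows. This is a minimal example, not the downloadable code: it matches against a target point set with a brute-force nearest-neighbor search instead of an octree search over a mesh, and the names `best_rigid_transform` and `icp` are assumptions made for this illustration.

```python
import numpy as np


def best_rigid_transform(a, b):
    """Rotation R and translation t minimizing sum ||R a_i + t - b_i||^2 (SVD method)."""
    ca, cb = a.mean(axis=0), b.mean(axis=0)
    H = (a - ca).T @ (b - cb)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection (determinant -1) in the SVD solution.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = cb - R @ ca
    return R, t


def icp(source, target, iterations=50, tol=1e-9):
    """Estimate R, t such that R @ source_i + t lies close to the target points."""
    src = np.asarray(source, dtype=float)
    tgt = np.asarray(target, dtype=float)
    R, t = np.eye(3), np.zeros(3)
    prev_err = np.inf
    for _ in range(iterations):
        moved = src @ R.T + t
        # Brute-force closest point search (an octree would speed this up).
        d2 = ((moved[:, None, :] - tgt[None, :, :]) ** 2).sum(axis=-1)
        idx = d2.argmin(axis=1)
        err = np.sqrt(d2[np.arange(len(src)), idx]).mean()
        # Re-fit the transform from the original source points to their matches.
        R, t = best_rigid_transform(src, tgt[idx])
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return R, t
```

Like any ICP variant, this sketch only converges to the correct answer when the initial misalignment is small enough for the closest-point matches to be mostly right.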

Find Closest Point on Mesh

This code calculates the nearest point on a mesh composed of triangles. This is for registration between a body coordinate point cloud and CT scan. This code assumes that the frame transformation between body and CT coordinates is the identity matrix.

You can download the code.
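
One common way to compute the nearest point on a triangle is to classify the query point against the triangle's vertex, edge, and face regions. The sketch below follows that approach with a linear scan over the triangles. It is an illustration rather than the downloadable code, and the function names and the data layout (a vertex list plus triangles as index triples) are assumptions.

```python
def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _along(origin, direction, s):
    return tuple(origin[i] + s * direction[i] for i in range(3))


def closest_point_on_triangle(p, a, b, c):
    """Closest point to p on triangle (a, b, c), by region classification."""
    ab, ac, ap = _sub(b, a), _sub(c, a), _sub(p, a)
    d1, d2 = _dot(ab, ap), _dot(ac, ap)
    if d1 <= 0 and d2 <= 0:
        return tuple(a)                                   # vertex a region
    bp = _sub(p, b)
    d3, d4 = _dot(ab, bp), _dot(ac, bp)
    if d3 >= 0 and d4 <= d3:
        return tuple(b)                                   # vertex b region
    vc = d1 * d4 - d3 * d2
    if vc <= 0 and d1 >= 0 and d3 <= 0:
        return _along(a, ab, d1 / (d1 - d3))              # edge ab region
    cp = _sub(p, c)
    d5, d6 = _dot(ab, cp), _dot(ac, cp)
    if d6 >= 0 and d5 <= d6:
        return tuple(c)                                   # vertex c region
    vb = d5 * d2 - d1 * d6
    if vb <= 0 and d2 >= 0 and d6 <= 0:
        return _along(a, ac, d2 / (d2 - d6))              # edge ac region
    va = d3 * d6 - d5 * d4
    if va <= 0 and (d4 - d3) >= 0 and (d5 - d6) >= 0:
        w = (d4 - d3) / ((d4 - d3) + (d5 - d6))
        return _along(b, _sub(c, b), w)                   # edge bc region
    denom = 1.0 / (va + vb + vc)                          # interior: barycentric
    v, w = vb * denom, vc * denom
    return tuple(a[i] + ab[i] * v + ac[i] * w for i in range(3))


def closest_point_on_mesh(p, vertices, triangles):
    """Linear scan over all triangles; returns the closest point found."""
    best, best_d2 = None, float("inf")
    for i, j, k in triangles:
        q = closest_point_on_triangle(p, vertices[i], vertices[j], vertices[k])
        diff = _sub(p, q)
        d2 = _dot(diff, diff)
        if d2 < best_d2:
            best, best_d2 = q, d2
    return best
```

The linear scan costs one triangle test per triangle per query, which is why a spatial structure such as the octree used in the ICP project above becomes worthwhile for large meshes.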

Distortion Correction Pivot Calibration

This code corrects for EM tracker distortion, performs the pivot calibration, computes the frame transformation between the EM and CT coordinate systems, and then calculates the pointer tip position with respect to the CT coordinate system.

You can download the code.
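
The description above does not say which distortion model the code uses. As one possible approach, the sketch below fits a tensor-product Bernstein polynomial that maps distorted measurements to their known true positions by least squares. The choice of Bernstein polynomials, the default degree, and the function names are all assumptions made for this illustration.

```python
from math import comb

import numpy as np


def bernstein_basis(u, degree=5):
    """Tensor-product Bernstein basis for points u of shape (N, 3) scaled to [0, 1]."""
    u = np.asarray(u, dtype=float)
    k = np.arange(degree + 1)
    binom = np.array([comb(degree, i) for i in k], dtype=float)
    # B[n, axis, i] = C(degree, i) * u^i * (1 - u)^(degree - i)
    B = binom * u[:, :, None] ** k * (1.0 - u[:, :, None]) ** (degree - k)
    F = np.einsum("ni,nj,nk->nijk", B[:, 0], B[:, 1], B[:, 2])
    return F.reshape(len(u), -1)


def fit_distortion_correction(measured, truth, degree=5):
    """Fit a correction function from distorted readings to true positions.

    measured : (N, 3) distorted tracker readings at calibration points
    truth    : (N, 3) known true positions of the same points
    Returns a function that maps (K, 3) readings to corrected positions.
    """
    measured = np.asarray(measured, dtype=float)
    truth = np.asarray(truth, dtype=float)
    # Scale readings into the unit box spanned by the calibration data
    # (assumes the calibration points are not degenerate along any axis).
    lo, hi = measured.min(axis=0), measured.max(axis=0)

    def scale(q):
        return (q - lo) / (hi - lo)

    coeffs, *_ = np.linalg.lstsq(
        bernstein_basis(scale(measured), degree), truth, rcond=None
    )

    def correct(q):
        q = np.asarray(q, dtype=float)
        return bernstein_basis(scale(q), degree) @ coeffs

    return correct
```

Readings that fall outside the calibration volume are extrapolated by the polynomial, so the correction should only be trusted inside the region covered by the calibration points.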

Point Cloud/Point Cloud Registration and Pivot Calibration

This code was developed as a Programming Assignment in the Computer Integrated Surgery class taught by Professor Russell Taylor. It calculates a pivot calibration to determine the placement of a pointer tip with respect to an EM coordinate system. In addition, it takes fiducial positions from EM tracking data as input and calculates a point cloud to point cloud registration between the EM and CT coordinate systems.

You can download the code.
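
Both computations reduce to small linear algebra problems. The sketch below shows one standard formulation of each: an SVD-based rigid registration between corresponding point sets, and a pivot calibration solved as a stacked least-squares system. It is not the assignment code. The function names are assumptions, and it assumes the pointer poses (rotation and translation per frame) have already been computed from the tracking data.

```python
import numpy as np


def rotation_about_axis(axis, angle):
    """Rodrigues' formula; helper used only to build example poses."""
    x, y, z = np.asarray(axis, dtype=float) / np.linalg.norm(axis)
    K = np.array([[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)


def register_points(a, b):
    """Rigid transform (R, t) minimizing sum ||R a_i + t - b_i||^2 for paired points."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    ca, cb = a.mean(axis=0), b.mean(axis=0)
    H = (a - ca).T @ (b - cb)
    U, _, Vt = np.linalg.svd(H)
    # Force a proper rotation (determinant +1) rather than a reflection.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    return R, cb - R @ ca


def pivot_calibration(rotations, translations):
    """Solve R_k @ p_tip + t_k = p_pivot for all frames k in a least-squares sense.

    Returns (p_tip, p_pivot): the tip in the pointer frame and the fixed
    pivot point in the tracker frame.
    """
    A = np.vstack([np.hstack([R, -np.eye(3)]) for R in rotations])
    b = -np.concatenate([np.asarray(t, dtype=float) for t in translations])
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x[:3], x[3:]
```

The pivot system only has a unique solution when the pointer is rotated about at least two distinct axes during data collection; frames that differ by rotation about a single axis leave the tip position along that axis undetermined.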

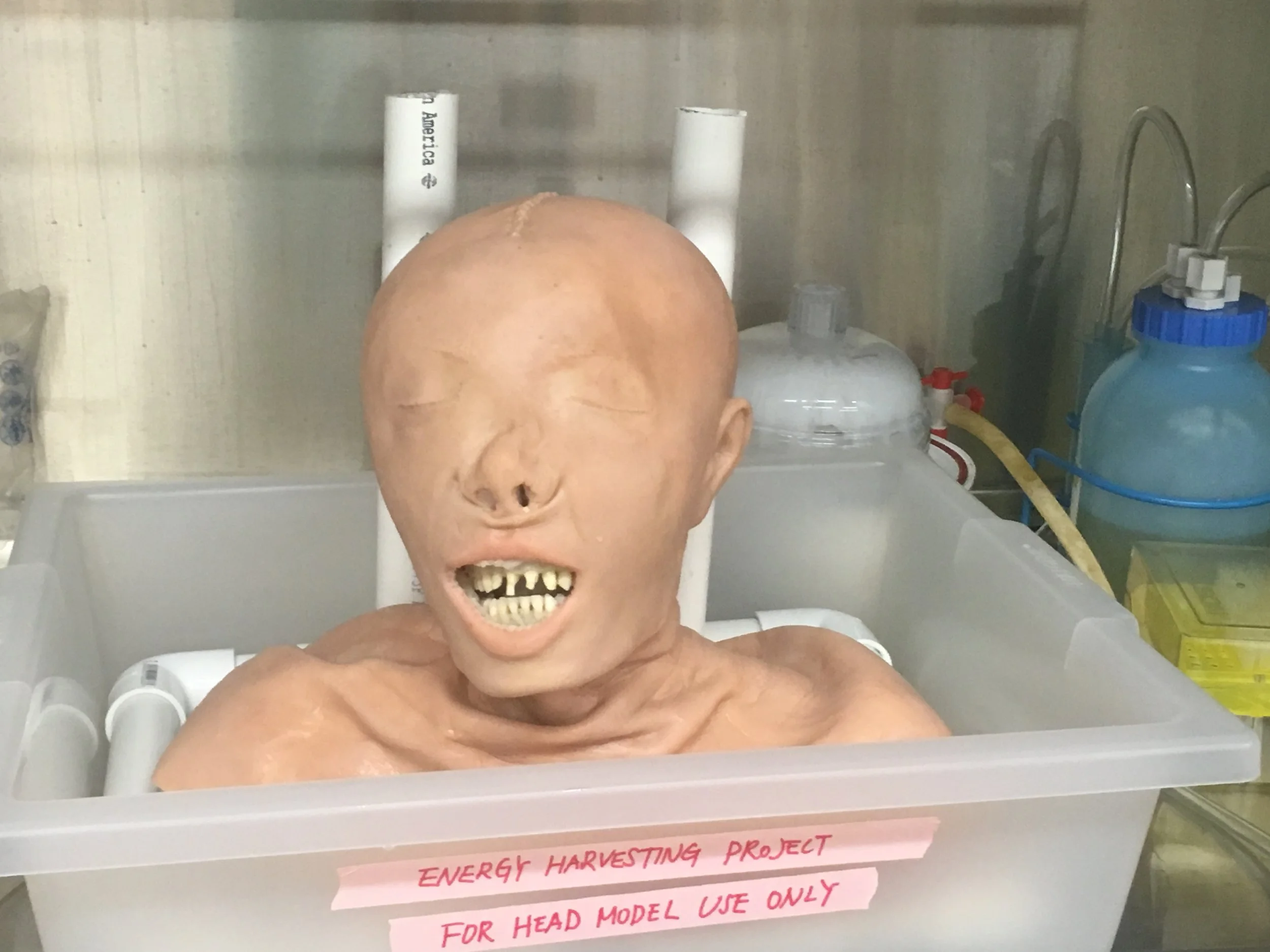

Designing Implantable Vibration Harvesters

Abstract

Advances in electronics, materials, and other fields have been leading to an increasing number of medical devices that are surgically implanted in patients. These devices are powered with batteries, typically implanted in the patient’s chest cavity, that are connected by wires running up to the device. With the battery pack and the wire inside the body comes considerable risk. The Choo lab seeks to minimize the risks of such devices by finding alternative methods for powering them from the body’s own natural activities, rather than by using batteries. We are researching possible sources of power that are generated by the body—the vibration of the larynx and the expansion of the chest when breathing. These harvesters were tested using an anatomical head model.